Gitlab Runner Python



Mar 6, 2019 by Thibault Debatty

Whatever programming language you are using for your project, the GitLab continuous integration system (gitlab-ci) is a fantastic tool that allows you to automatically run tests when code is pushed to your repository. Java, PHP, Go, Python or even LaTeX, no limit here! In this blog post we review a few examples for the Python programming language.


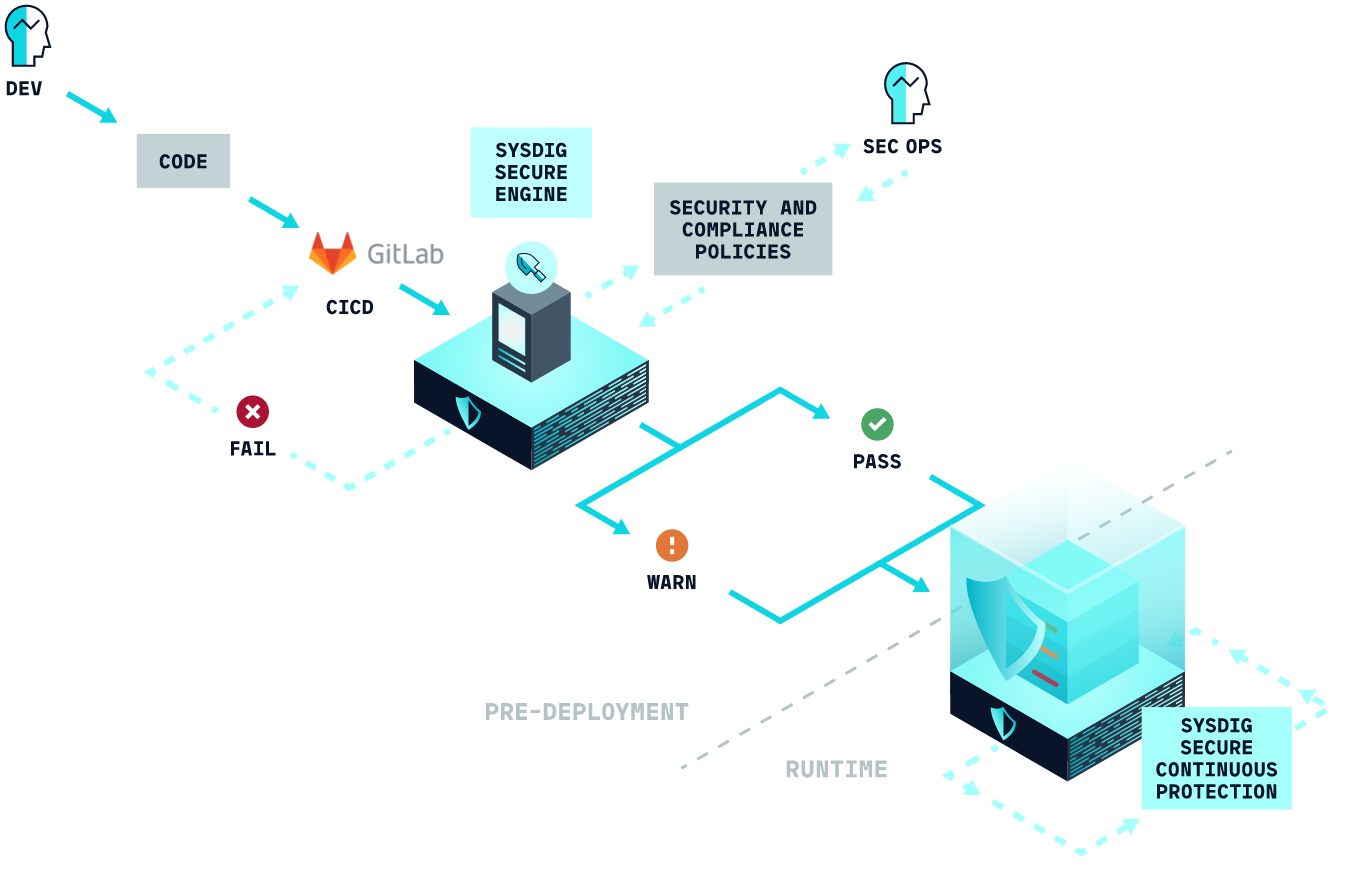

In GitLab, the different tests are called jobs. These jobs are executed by a gitlab-runner, which can be installed on the same server as your main GitLab instance, or on one or more separate servers.

Different options exist to run the jobs: using VirtualBox virtual machines, using Docker containers or using no virtualization at all. Most administrators configure gitlab-runner to run jobs using Docker images. This is also what we will assume for the remainder of this post.

PyLint

Pylint is a powerful tool that performs static code analysis and checks that your code is written following Python coding standards (called PEP8).

To automatically check your code when you push to your repository, add a file called .gitlab-ci.yml at the root of your project with the following content:
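A minimal sketch of such a file, consistent with the description that follows (a job named test:pylint running on the python:3.6 image). The pip install step and the exact pylint arguments are assumptions:

```yaml
# Sketch only: the install step and the *.py target are assumptions.
test:pylint:
  image: python:3.6
  script:
    # Install pylint inside the container, then lint all top-level modules
    - pip install pylint
    - pylint *.py
```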

test:pylint is simply the name of the job. You can choose whatever you want. The rest of the code indicates that gitlab-runner should use the docker image python:3.6, and run the mentioned commands.

When using some external classes (like sockets), PyLint may complain that the used method does not exist, although the method does actually exist. You can ignore these errors by appending --ignored-classes=... to the pylint command line.


You can also specify a directory (instead of *.py), but it must be a Python package, i.e. it must contain an __init__.py file.

Good to know: Pylint is shipped with Pyreverse, which creates UML diagrams from Python code.

Unit testing with PyTest

PyTest is a framework designed to help you test your Python code. Here is an example of a test:
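A minimal sketch of such a test, assuming a hypothetical file named test_capitalize.py and a hypothetical function capital_case (neither name comes from the project itself):

```python
# test_capitalize.py (hypothetical file name, chosen to match pytest's test_*.py pattern)

def capital_case(text):
    """Return the text with its first letter in upper case."""
    return text.capitalize()


def test_capital_case():
    # pytest collects functions whose names start with "test_"
    assert capital_case("semaphore") == "Semaphore"
```

Running pytest in the directory containing this file should pick up and execute test_capital_case.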

To run these tests automatically when you push code to your repository, add the following job to your .gitlab-ci.yml.
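A minimal sketch of such a job, assuming the job name test:pytest and the same python:3.6 image as before (both are illustrative choices):

```yaml
# Sketch only: job name and install step are assumptions.
test:pytest:
  image: python:3.6
  script:
    - pip install pytest
    # Without arguments, pytest discovers the tests on its own
    - pytest
```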

pytest will automatically discover all test files in your project (all files named test_*.py or *_test.py) and execute them.

Testing multiple versions of Python

One of the main benefits of automatic testing with gitlab-ci is that you can test multiple versions of Python at the same time. There is however one caveat: if you plan to test your code with both Python 2 and Python 3, you will at least need to disable the superfluous-parens check in Python 2 (for the print statement, which became a function in Python 3). Here is a full example of .gitlab-ci.yml:
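A sketch of what such a file could look like, assuming the images python:2.7 and python:3.6 and illustrative job names. The superfluous-parens check is switched off with pylint's --disable option for the Python 2 job only:

```yaml
# Sketch only: job names, images and install steps are assumptions.
test:pylint:27:
  image: python:2.7
  script:
    - pip install pylint
    # print(...) written for Python 3 would be flagged as superfluous parentheses
    - pylint --disable=superfluous-parens *.py

test:pylint:36:
  image: python:3.6
  script:
    - pip install pylint
    - pylint *.py

test:pytest:27:
  image: python:2.7
  script:
    - pip install pytest
    - pytest

test:pytest:36:
  image: python:3.6
  script:
    - pip install pytest
    - pytest
```

Each job runs in its own container, so the four jobs are independent of one another.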

As I have been arguing for years that the Python ecosystem urgently needs to mature its project dependency management tools, I am a very big fan of Python poetry. Since it became stable enough to use, I have been transitioning my projects to a pyproject.toml instead of working with conda or setup.py (the latter I perceive as pretty complicated). It is important to note that which tools to choose always depends very strongly on the particular use case.

Confronted with lots of computer science and programming students at work, I notice a huge gap in the understanding of software engineering principles. Especially in the realm of Data Science it is very common to do grubby prototyping (not to be confused with reasonable prototyping) and to mix lots of tools and principles. My condensed recommendation is to separate project concerns into, for example, implementation and data analysis. If a tool or library is part of the implementation, it totally makes sense to go with something like poetry, while visual analytics often makes more sense in an interactive Jupyter notebook, especially while the learning curve is still steep. In my eyes, many engineers are afraid of separating projects (such as an experimental thesis) into parts.

Anyway, when does it make sense to use poetry and when does it make sense to use e.g. conda or pip? It is important to notice that all of those tools serve slightly different purposes. The short answer, however, is still: if you are designing a library or tool and want to publish it (even if just for yourself), poetry is the way to go, provided you have no heavy dependencies which require you to stick to setuptools. To quickly create an experiment, or if you need system dependencies, conda still has its right to exist in the Python world. In my opinion, pip should simply be avoided at all cost and can be wrapped behind poetry and conda.


Experiments usually require heavy libraries to hide complexity under the hood. This makes experiment design well-arranged, clearly laid out and decoupled from underlying technical changes. In Machine Learning / Data Science such libraries in Python usually include numpy, pandas, matplotlib, graphviz, networkx, scikit-learn, tensorflow, pytorch or simpy, to mention just a few. Experiments should be fully reproducible, which is still not as easy as one might think. On a dependency management level, conda suits this task best, as it is able to pin not only Python dependencies to very distinct versions, but also system dependencies. This pinning to very distinct versions is a major difference to setting up a project such as a library or tool. Libraries or tools should have very loose version restrictions to make them fit into as many other projects as possible.

Steps:

- create a new directory and initialize a git repository

- create an environment.yml file

- create an environment auto-completion file

- create a conda environment given the environment specification

A short one-liner for your bash: EXP='exp00-experimenttitle' && mkdir $EXP && cd $EXP && echo 'This file is for conda env name auto-completion' >> 'sur-'$EXP && printf 'name: sur-'$EXP'\nchannels:\n- defaults\ndependencies:\n- python>=3.8' >> environment.yml && git init && conda env create -f environment.yml && conda activate 'sur-'$EXP
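For reference, the printf call in the one-liner is meant to produce an environment.yml like the following, shown here for the example name exp00-experimenttitle:

```yaml
# environment.yml as written by the one-liner for EXP='exp00-experimenttitle'
name: sur-exp00-experimenttitle
channels:
- defaults
dependencies:
- python>=3.8
```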

To make your environment specification very restrictive on particular dependency versions, you can use conda env export to generate a specification from the versions resolved in your environment. However, this also includes indirect dependencies, which might not be desired.

sur-exp00-experimenttitle: I usually create auto-completion files for my conda environments, because with dozens of projects it can get quite tricky to remember names. The prefix “sur-” (for surrounding) is a custom choice and a prefix I seldom encounter, which makes auto-completion work quite easily.

environment.yml: Usually I do heavy computations based on given libraries (sometimes also with my own). This requires not much engineering except for persisting data. Analyzing this data, then, should be decoupled from computation code, e.g. by loading pre-processed data from CSV or JSON files in which results have been persisted. This allows you to switch between computation results and to quickly run statistical analyses and visualizations. Visual analytics then usually involves the following packages:

Shorthand code: PROJ='projecttitle' && mkdir $PROJ && cd $PROJ && git init && poetry init -n --name $PROJ && poetry install

A purely Python-based project does not have any more dependencies than that. You can add packages, lock versions and manage your virtual environment completely with poetry.

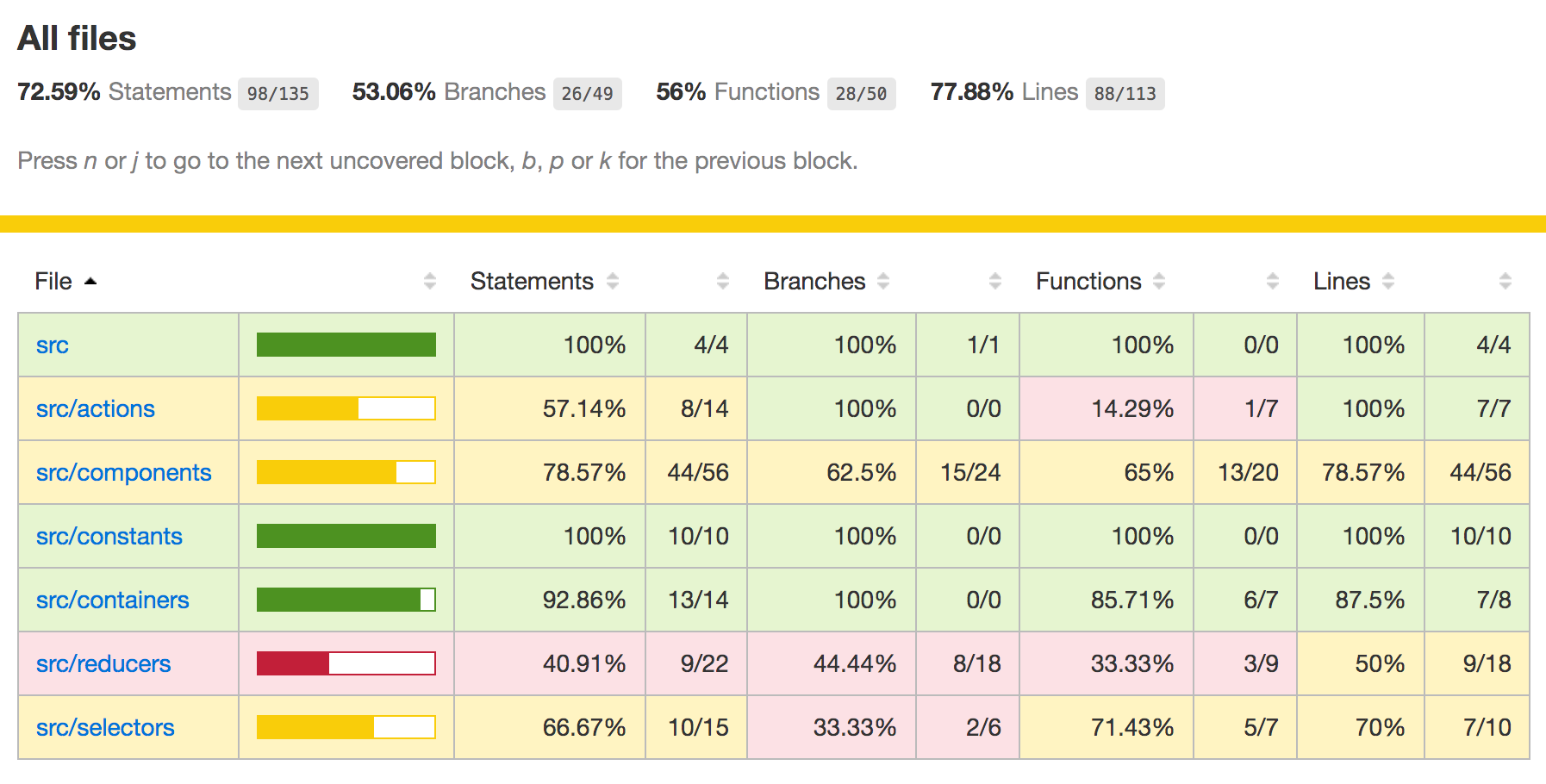

It took me some time to get continuous integration with pytest working. My result is inspired by a .gitlab-ci.yml of github.com/pawamoy. To avoid unnecessary commits to your repository, you can install gitlab-runner and test your configuration locally with e.g. gitlab-runner exec docker test-python3.8 (in which the last argument specifies the job name from your .gitlab-ci.yml). Install gitlab-runner version 12.3 or later:

To reproduce the poetry virtual environment, you have to make sure that your base image has python and pip available. This is why the base images are based on e.g. python:3.6; otherwise you would need to add package installations for python3-dev and python3-pip:


Then you can install poetry, e.g. from pip: pip install poetry (or use the officially recommended installer: curl -sSL https://raw.githubusercontent.com/python-poetry/poetry/master/get-poetry.py | python). As soon as poetry is installed as a global command within your image, you can use it to install the virtual environment for your particular project. To make packages such as pytest available, I found two tricks helpful:

- installing the virtual environment within the project folder. This can be done with poetry config virtualenvs.in-project true, a config option for poetry.

- calling pytest through poetry, which resolves the package in the virtual environment: poetry run pytest tests/.

Templating in the gitlab-ci configuration furthermore helps to test different base images without duplicating configuration descriptions. Also note that custom stage names have been defined (the default ones are something like test and deploy).


The full content of my .gitlab-ci.yml:
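A minimal sketch of such a configuration, not the original file. It assumes a single custom stage named tests, a hidden template job .test-template reused through a YAML anchor, and the images python:3.6 and python:3.8. Only the poetry commands and the job name test-python3.8 are taken from the text above:

```yaml
# Sketch only: stage name, template name and image list are assumptions.
stages:
  - tests

# Hidden job (leading dot) that serves as a template via a YAML anchor
.test-template: &test-template
  stage: tests
  before_script:
    - pip install poetry
    # Keep the virtual environment inside the project folder
    - poetry config virtualenvs.in-project true
    - poetry install
  script:
    # Run pytest from the poetry-managed virtual environment
    - poetry run pytest tests/

test-python3.6:
  <<: *test-template
  image: python:3.6

test-python3.8:
  <<: *test-template
  image: python:3.8
```

The anchor keeps the install and test steps in one place, so adding another Python version only takes a new job with a different image.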